ALEXA VS. ALEXA —

Popular “smart” device follows commands issued by its own speaker. What could possibly go wrong?

Dan Goodin –

A group of Amazon Echo smart speakers, including Echo Studio, Echo, and Echo Dot models. (Photo by Neil Godwin/Future Publishing via Getty Images)

T3 Magazine/Getty Images

Academic researchers have devised a new working exploit that commandeers Amazon Echo smart speakers and forces them to unlock doors, make phone calls and unauthorized purchases, and control furnaces, microwave ovens, and other smart appliances.

The attack works by using the device’s speaker to issue voice commands. As long as the speech contains the device wake word (usually “Alexa” or “Echo”) followed by a permissible command, the Echo will carry it out, researchers from Royal Holloway University in London and Italy’s University of Catania found. Even when devices require verbal confirmation before executing sensitive commands, it’s trivial to bypass the measure by adding the word “yes” about six seconds after issuing the command. Attackers can also exploit what the researchers call the “FVV,” or full voice vulnerability, which allows Echos to make self-issued commands without temporarily reducing the device volume.
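The structure of a self-issued command described above (wake word, permissible command, and an optional “yes” roughly six seconds later) can be modeled as a simple timeline, which is also the pattern a defender would want to recognize. The following is only an illustrative sketch, not the researchers’ tooling: the names `TimedUtterance`, `starts_with_wake_word`, and `build_timeline` are hypothetical, and the six-second delay is taken from the article’s “about six seconds.”

```python
from dataclasses import dataclass

# Wake words named in the article; real devices may be configured differently.
WAKE_WORDS = ("alexa", "echo")
# "About six seconds" per the article; treated here as a fixed assumption.
CONFIRM_DELAY_S = 6.0


@dataclass(frozen=True)
class TimedUtterance:
    """One spoken phrase and when it is played (hypothetical model)."""
    offset_s: float  # seconds after playback starts
    text: str


def starts_with_wake_word(utterance: str) -> bool:
    """Return True if the utterance begins with one of the wake words."""
    words = utterance.strip().lower().replace(",", " ").split()
    return bool(words) and words[0] in WAKE_WORDS


def build_timeline(command: str, needs_confirmation: bool) -> list[TimedUtterance]:
    """Model a self-issued command, plus the delayed 'yes' if one is required."""
    if not starts_with_wake_word(command):
        raise ValueError("command must start with a wake word")
    timeline = [TimedUtterance(0.0, command)]
    if needs_confirmation:
        # The confirmation prompt is answered by a "yes" played after the delay.
        timeline.append(TimedUtterance(CONFIRM_DELAY_S, "yes"))
    return timeline


if __name__ == "__main__":
    for step in build_timeline("Alexa, unlock the front door", needs_confirmation=True):
        print(f"t={step.offset_s:>4.1f}s  {step.text}")
```

The sketch shows why the verbal confirmation step adds little protection here: the same audio source that speaks the command can also speak the confirmation.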

Alexa, go hack yourself

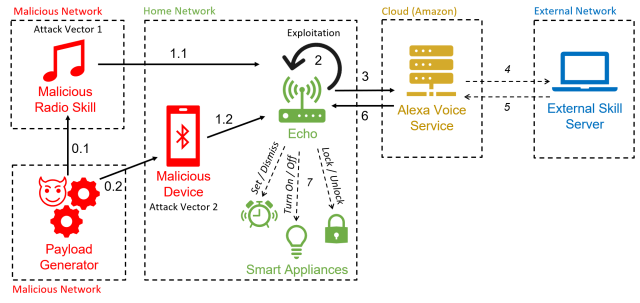

Because the hack uses Alexa functionality to force devices to make self-issued commands, the researchers have dubbed it “AvA,” short for Alexa vs. Alexa. It requires only a few seconds of proximity to a vulnerable device while it’s turned on so an attacker can utter a voice command instructing it to pair with an attacker’s Bluetooth-enabled device. As long as the device remains within radio range of the Echo, the attacker will be able to issue commands.

The attack “is the first to exploit the vulnerability of self-issuing arbitrary commands on Echo devices, allowing an attacker to control them for a prolonged amount of time,” the researchers wrote in a paper published two weeks ago. “With this work, we remove the necessity of having an external speaker near the target device, increasing the overall likelihood of the attack.”

A variation of the attack uses a malicious radio station to generate the self-issued commands. That attack is no longer possible in the way shown in the paper following security patches that Echo-maker Amazon released in response to the research. The researchers have confirmed that the attacks work against 3rd- and 4th-generation Echo Dot devices.

Esposito et al.

AvA begins when a vulnerable Echo device connects by Bluetooth to the attacker’s device (and for unpatched Echos, when they play the malicious radio station). From then on, the attacker can use a text-to-speech app or other means to stream voice commands. Here’s a video of AvA in action. All the variations of the attack remain viable, with the exception of what’s shown between 1:40 and 2:14:

Alexa versus Alexa – Demo.

The researchers found they could use AvA to force devices to carry out a host of commands, many with serious privacy or security consequences. Possible malicious actions include:

- Controlling other smart appliances, such as turning off lights, turning on a smart microwave oven, setting the heating to an unsafe temperature, or unlocking smart door locks. As noted earlier, when Echos require confirmation, the adversary only needs to append a “yes” to the command about six seconds after the request.

- Calling any phone number, including one controlled by the attacker, so that it’s possible to eavesdrop on nearby sounds. While Echos use a light to indicate that they are making a call, devices are not always visible to users, and less experienced users may not know what the light means.

- Making unauthorized purchases using the victim’s Amazon account. Although Amazon will send an email notifying the victim of the purchase, the email may be missed or the user may lose trust in Amazon. Alternatively, attackers can also delete items already in the account shopping cart.

- Tampering with a user’s previously linked calendar to add, move, delete, or modify events.

- Impersonating skills or starting any skill of the attacker’s choice. This, in turn, could allow attackers to obtain passwords and personal data.

- Retrieving all utterances made by the victim. Using what the researchers call a “mask attack,” an adversary can intercept commands and store them in a database. This could allow the adversary to extract private data, gather information on used skills, and infer user habits.

The researchers wrote:

With these tests, we demonstrated that AvA can be used to give arbitrary commands of any type and length, with optimal results; in particular, an attacker can control smart lights with a 93% success rate, successfully buy unwanted items on Amazon 100% of the times, and tamper [with] a linked calendar with 88% success rate. Complex commands that have to be recognized correctly in their entirety to succeed, such as calling a phone number, have an almost optimal success rate, in this case 73%. Additionally, results shown in Table 7 demonstrate the attacker can successfully set up a Voice Masquerading Attack via our Mask Attack skill without being detected, and all issued utterances can be retrieved and stored in the attacker’s database, namely 41 in our case.
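To put the quoted success rates in context, here is a rough back-of-the-envelope sketch of how quickly retries would push even the weakest figure (73% for phone calls) toward certainty. It rests on an assumption the paper excerpt does not state, namely that repeated attempts succeed independently of one another. The function name `at_least_one_success` and the dictionary labels are illustrative only.

```python
# Per-attempt success rates as quoted from the researchers' paper.
REPORTED_SUCCESS_RATES = {
    "control smart lights": 0.93,
    "buy items on Amazon": 1.00,
    "tamper with linked calendar": 0.88,
    "call a phone number": 0.73,
}


def at_least_one_success(rate: float, attempts: int) -> float:
    """Probability that at least one of `attempts` tries succeeds.

    ASSUMPTION (not from the paper): attempts are independent, so the
    chance that every attempt fails is (1 - rate) ** attempts.
    """
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    return 1.0 - (1.0 - rate) ** attempts


if __name__ == "__main__":
    for action, rate in REPORTED_SUCCESS_RATES.items():
        two_tries = at_least_one_success(rate, 2)
        print(f"{action}: {rate:.0%} per attempt, {two_tries:.1%} within two attempts")
```

Under that independence assumption, an attacker who stays within Bluetooth range and can simply repeat a command faces little practical obstacle from a per-attempt rate below 100%.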