By Chris Vallance

Technology reporter

A new chatbot has passed one million users in less than a week, the project behind it says.

ChatGPT was publicly released on Wednesday by OpenAI, an artificial intelligence research firm whose founders included Elon Musk.

But the company warns it can produce problematic answers and exhibit biased behaviour.

OpenAI says it is “eager to collect user feedback to aid our ongoing work to improve this system”.

ChatGPT is the latest in a series of AIs which the firm refers to as GPTs, an acronym which stands for Generative Pre-Trained Transformer.

To develop the system, an early version was fine-tuned through conversations with human trainers.

The system also learned from access to Twitter data, according to a tweet from Elon Musk, who is no longer part of OpenAI’s board. The Twitter boss wrote that he had paused access “for now”.

The results have impressed many who’ve tried out the chatbot. OpenAI chief executive Sam Altman revealed the level of interest in the artificial conversationalist in a tweet.

The project says the chat format allows the AI to answer “follow-up questions, admit its mistakes, challenge incorrect premises and reject inappropriate requests”.

A journalist for technology news site Mashable who tried out ChatGPT reported it is hard to provoke the model into saying offensive things.

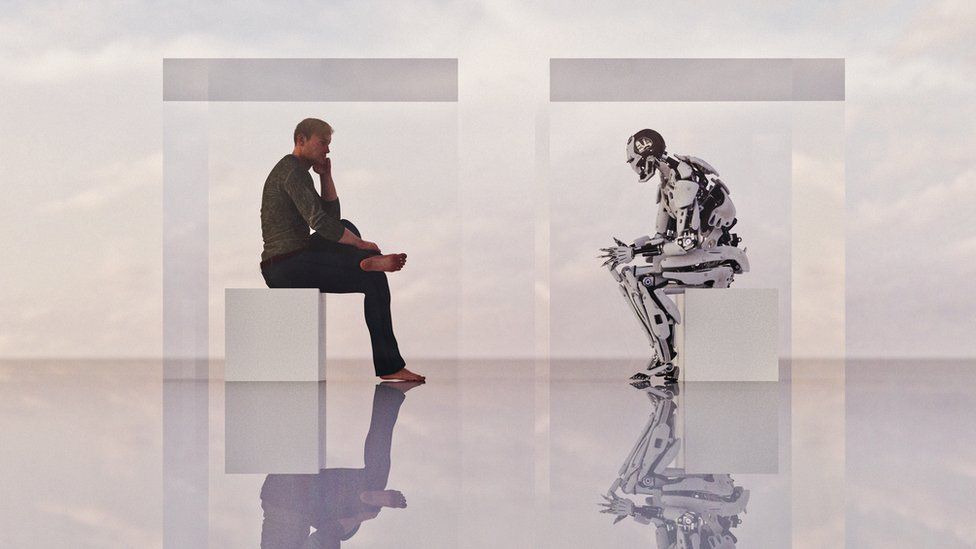

Image source, Getty Images

Mike Pearl wrote that in his own tests “its taboo avoidance system is pretty comprehensive”.

However, OpenAI warns that “ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers”.

Training the model to be more cautious, says the firm, causes it to decline to answer questions that it can answer correctly.

Briefly questioned by the BBC for this article, ChatGPT revealed itself to be a cautious interviewee capable of expressing itself clearly and accurately in English.

Did it think AI would take the jobs of human writers? No – it argued that “AI systems like myself can help writers by providing suggestions and ideas, but ultimately it is up to the human writer to create the final product”.

Asked what the social impact of AI systems such as itself would be, it said this was “hard to predict”.

Had it been trained on Twitter data? It said it did not know.

Only when the BBC asked a question about HAL, the malevolent fictional AI from the film 2001, did it seem troubled.

Image source, OpenAI/BBC

A question ChatGPT declined to answer – or maybe just a glitch

Although that was most likely just a random error – unsurprising perhaps, given the volume of interest.

Its master’s voice

Other firms which opened conversational AIs to general use found they could be persuaded to say offensive or disparaging things.

Many are trained on vast databases of text scraped from the internet, and consequently they learn from the worst as well as the best of human expression.

Meta’s BlenderBot3 was highly critical of Mark Zuckerberg in a conversation with a BBC journalist.

In 2016, Microsoft apologised after an experimental AI Twitter bot called “Tay” said offensive things on the platform.

And others have found that sometimes success in creating a convincing computer conversationalist brings unexpected problems.

Google’s Lamda was so plausible that a now-former employee concluded it was sentient, and deserving of the rights due to a thinking, feeling being, including the right not to be used in experiments against its will.

Jobs threat

ChatGPT’s ability to answer questions caused some users to wonder if it might replace Google.

Others asked if journalists’ jobs were at risk. Emily Bell of the Tow Center for Digital Journalism worried that readers might be deluged with “bilge”.

ChatGPT proves my greatest fears about AI and journalism – not that bona fide journalists will be replaced in their work – but that these capabilities will be used by bad actors to autogenerate the most astounding amount of misleading bilge, smothering reality

— emily bell (@emilybell) December 4, 2022

One question-and-answer site has already had to curb a flood of AI-generated answers.

Others invited ChatGPT to speculate on AI’s impact on the media.

General purpose AI systems, like ChatGPT and others, raise a number of ethical and societal risks, according to Carly Kind of the Ada Lovelace Institute.

Among the potential problems of concern to Ms Kind are that AI might perpetuate disinformation, or “disrupt existing institutions and services – ChatGPT might be able to write a passable job application, school essay or grant application, for example”.

There are also, she said, questions around copyright infringement “and there are also privacy concerns, given that these systems often incorporate data that is unethically collected from internet users”.

However, she said they may also deliver “interesting and as-yet-unknown societal benefits”.

ChatGPT learns from human interactions, and OpenAI chief executive Sam Altman tweeted that those working in the field also have much to learn.

AI has a “long way to go, and big ideas yet to discover. We will stumble along the way, and learn a lot from contact with reality.

“It will sometimes be messy. We will sometimes make really bad decisions, we will sometimes have moments of transcendent progress and value,” he wrote.