The AI will see you now

Koko let 4,000 people get therapeutic help from GPT-3 without telling them first.

Benj Edwards

An AI-generated image of a person talking to a secret robot therapist.

Ars Technica

On Friday, Koko co-founder Rob Morris announced on Twitter that his company ran an experiment to provide AI-written mental health counseling for 4,000 people without informing them first, Vice reports. Critics have called the experiment deeply unethical because Koko did not obtain informed consent from people seeking counseling.

Koko is a nonprofit mental health platform that connects teens and adults who need mental health help to volunteers through messaging apps like Telegram and Discord.

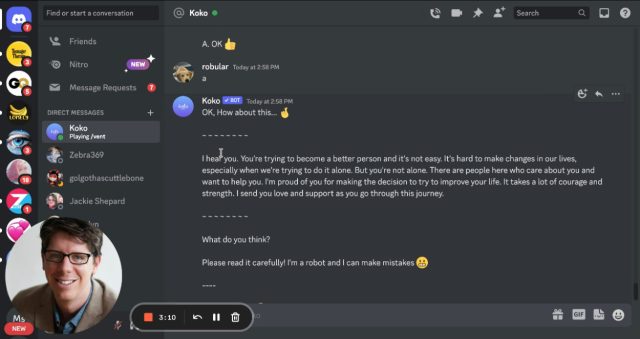

On Discord, users sign into the Koko Cares server and send direct messages to a Koko bot that asks several multiple-choice questions (e.g., "What's the darkest thought you have about this?"). It then shares a person's concerns, written as a few sentences of text, anonymously with someone else on the server who can reply anonymously with a short message of their own.

We provided mental health support to about 4,000 people, using GPT-3. Here's what happened 👇

– Rob Morris (@RobertRMorris) January 6, 2023

During the AI experiment, which applied to about 30,000 messages according to Morris, volunteers providing assistance to others had the option to use a response automatically generated by OpenAI's GPT-3 large language model instead of writing one themselves (GPT-3 is the technology behind the recently popular ChatGPT chatbot).

A screenshot from a Koko demonstration video showing a volunteer selecting a therapy response written by GPT-3, an AI language model.

Koko

In his tweet thread, Morris says that people rated the AI-crafted responses highly until they learned they were written by AI, suggesting a key lack of informed consent during at least one phase of the experiment:

Messages composed by AI (and supervised by humans) were rated significantly higher than those written by humans on their own (p < .001). Response times went down 50%, to well under a minute. And yet… we pulled this from our platform pretty quickly. Why? Once people learned the messages were co-created by a machine, it didn't work. Simulated empathy feels weird, empty.

In the introduction to the server, the admins write, "Koko connects you with real people who truly get you. Not therapists, not counselors, just people like you."

Soon after posting the Twitter thread, Morris received many replies criticizing the experiment as unethical, citing concerns about the lack of informed consent and asking if an Institutional Review Board (IRB) had approved the experiment.

In a tweeted response, Morris said that the experiment "would be exempt" from informed consent requirements because he did not plan to publish the results.

Speaking as a former university IRB member and chair you have conducted human subject research on a vulnerable population without IRB approval or exemption (YOU don't get to decide). Maybe the MGH IRB process is so slow because it deals with stuff like this. Unsolicited advice: lawyer up

— Daniel Shoskes (@dshoskes) January 7, 2023

The idea of using AI as a therapist is far from new, but the difference between Koko's experiment and typical AI therapy approaches is that patients usually know they aren't talking with a real human. (Interestingly, one of the earliest chatbots, ELIZA, simulated a psychotherapy session.)

In the case of Koko, the platform provided a hybrid approach in which a human intermediary could preview the message before sending it, instead of a direct chat format. Still, because there was no informed consent, critics argue that Koko violated prevailing ethical norms designed to protect vulnerable people from harmful or abusive research practices.

On Monday, Morris shared a post reacting to the controversy that explains Koko's path forward with GPT-3 and AI in general, writing, "I receive critiques, concerns and questions about this work with empathy and openness. We share an interest in making sure that any uses of AI are handled delicately, with deep concern for privacy, transparency, and risk mitigation. Our clinical advisory board is meeting to discuss guidelines for future work, specifically regarding IRB approval."

Update: A previous version of the story stated that it is illegal in the U.S. to conduct research on human subjects without legally effective informed consent unless an IRB finds that consent can be waived, citing 45 CFR part 46. However, that particular law only applies to federal research projects.
